The race for AI supremacy has reached new heights as xAI unveils its mammoth supercomputing facility in Memphis, setting unprecedented benchmarks in computational power and engineering innovation.

In a stunning display of technological prowess, xAI’s Colossus supercomputer cluster has emerged as the world’s largest and most powerful computing facility. Housing an initial deployment of 100k NVIDIA Hopper Tensor Core GPUs, the facility stands as a testament to rapid infrastructure development, having been constructed in just 122 days.

The facility’s architecture presents several innovative solutions to complex engineering challenges. Perhaps most notably, the implementation of Tesla Megapacks addresses the critical issue of power stability during GPU training operations. When thousands of processors simultaneously initiate training sequences, millisecond power fluctuations threatened to destabilize the entire system. xAI’s solution? A sophisticated power management system where generators charge Tesla Megapacks, which then provide consistent power delivery during intensive computational tasks.

NVIDIA’s Spectrum-X Ethernet networking platform serves as the digital nervous system of this computational giant. The platform orchestrates data movement across the vast array of processors, ensuring efficient communication between components while maintaining the low latency required for advanced AI training operations.

xAI isn’t stopping at 100k GPUs. The company has already initiated plans to double Colossus’s capacity to 200k NVIDIA Hopper GPUs, pushing the facility’s estimated value beyond $6 billion, (Elon Musk xAI Rakes In Massive $6 Billion Warchest to Take On OpenAI). This expansion positions xAI at the forefront of AI infrastructure development, potentially redefining the boundaries of machine learning capabilities.

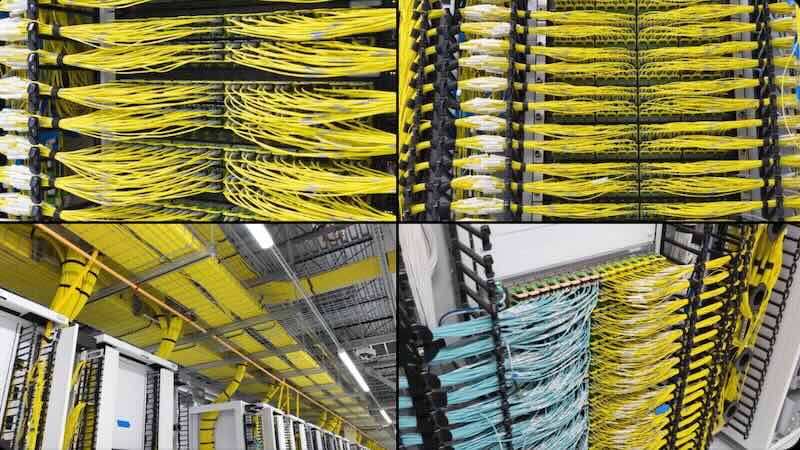

The facility’s liquid cooling system features industrial-scale pipes that manage the intense heat generated by the GPU clusters. This cooling infrastructure, combined with the meticulously organized cable management system, showcases the attention to detail in the facility’s design and implementation.

Most remarkable is the facility’s rapid deployment timeline. While similar-scale systems typically require years to complete, xAI managed to begin training operations just 19 days after installing the first rack. This achievement marks a significant shift in how large-scale AI infrastructure can be deployed and operationalized.

Related Post

Elon Musk xAI Unveils Colossus: World’s Most Powerful AI Cluster?

xAI Launches Grok API: Developer Access to Advanced AI Model

xAI Grok-2 and Grok-2 mini: The New Frontiers of AI Assistants