This week, Tesla flipped the switch on a massive new 10,000-unit Nvidia H100 GPU cluster to turbocharge AI training for its end-to-end Full Self-Driving (FSD) development (Elon Musk has now livestreamed FSD Beta V12 on X). The cutting-edge infrastructure provides a huge boost in compute power right before Tesla’s own Dojo AI supercomputer comes online.

Why Tesla Needs More Training Compute

As Tesla gathers more real-world driving footage, its neural net training demands grow rapidly. FSD Beta testing already provides about 1 billion miles of driving data per year. Analyzing this massive video dataset requires parallel processing at very large scale.

Additional training power lets Tesla iterate faster on autonomous driving models. More complex neural networks and larger batch sizes become practical, which can translate into higher accuracy. The new 10,000-GPU cluster also gives Tesla headroom as the video dataset balloons.

Nvidia H100 GPUs – Optimized for AI Workloads

The brand-new Nvidia H100 GPUs are purpose-built for AI tasks. Nvidia claims up to 30x the performance of the previous-generation A100 GPUs that Tesla already uses, a best-case figure that applies to inference on large language models.

Key Nvidia H100 GPU specs (SXM5 version):

- 16,896 CUDA cores (the full GH100 die has 18,432)

- 528 fourth-generation tensor cores

- 132 streaming multiprocessors

Nvidia says the H100 handles AI training workloads up to 9x faster than the A100. For Tesla’s usage, the 5x speedup cited for high-performance computing is also significant.
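To put those multipliers in concrete terms, here is a minimal Python sketch of what a given speedup would mean for wall-clock time. The 90-hour baseline and the `accelerated_hours` helper are hypothetical, chosen only for illustration, and the speedups are vendor best-case figures; real gains depend heavily on the model and workload.

```python
def accelerated_hours(baseline_hours: float, speedup: float) -> float:
    """Return the wall-clock time after applying a speedup factor."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return baseline_hours / speedup


# Hypothetical baseline: a job that takes 90 hours on A100 hardware.
BASELINE_HOURS = 90.0

# Speedup factors quoted in the article (best-case vendor claims).
CLAIMED_SPEEDUPS = {
    "AI training (up to 9x)": 9.0,
    "High-performance computing (5x)": 5.0,
}

for label, speedup in CLAIMED_SPEEDUPS.items():
    hours = accelerated_hours(BASELINE_HOURS, speedup)
    print(f"{label}: {BASELINE_HOURS:.0f} h -> {hours:.0f} h")
```

Under these assumptions, a 90-hour job would drop to 10 hours at 9x and to 18 hours at 5x, which is why faster hardware translates so directly into more training iterations per week.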

Why Tesla Still Needs the Dojo Supercomputer

Despite the massive upgrade, GPU supply shortages have throttled Tesla’s access to Nvidia hardware. Even with the new cluster, Tesla requires more capacity.

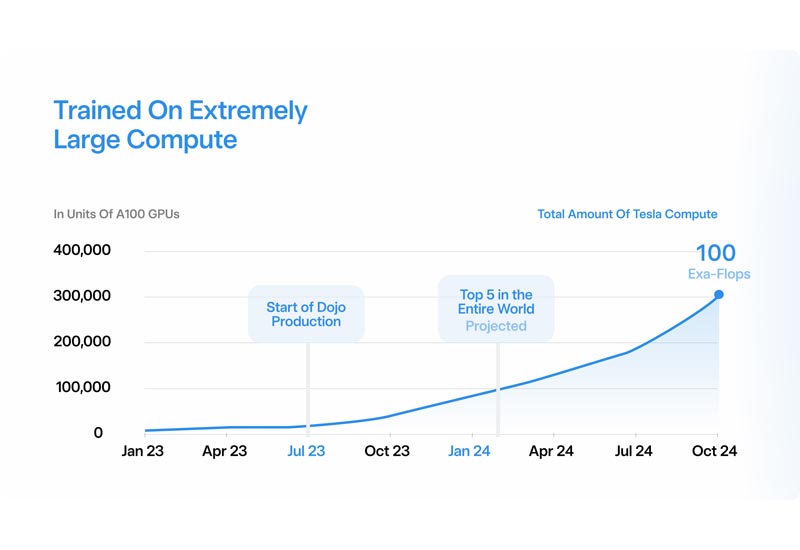

This demand gap led Tesla to develop its own Tesla Dojo Supercomputer powered by internally-designed AI chips. When fully built out, Dojo will be among the world’s most powerful AI systems.

Dojo’s Mind-Blowing AI Performance

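Elon Musk has stated Dojo will reach 1,000 petaflops of processing power. By comparison, Japan’s Fugaku supercomputer reaches 442 petaflops, and Frontier, the current leader on the TOP500 list, delivers about 1.2 exaflops on the double-precision Linpack benchmark. Those benchmark figures are not directly comparable to the lower-precision throughput quoted for AI hardware.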

In the low-precision INT8 domain used for AI workloads specifically, Dojo is projected to achieve over 1 exaflop (1,000 petaflops). If Tesla hits that target, Dojo would rank among the most powerful AI training systems in the world.
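The petaflop comparison above is simple unit arithmetic, sketched below in Python. The helper names are hypothetical, and the inputs are only the headline figures quoted in the text; the result is a ratio of marketing-level numbers measured at different precisions, not a benchmark.

```python
PETAFLOPS_PER_EXAFLOP = 1_000


def exaflops_to_petaflops(exaflops: float) -> float:
    """Convert exaflops to petaflops (1 exaflop = 1,000 petaflops)."""
    return exaflops * PETAFLOPS_PER_EXAFLOP


def throughput_ratio(a_petaflops: float, b_petaflops: float) -> float:
    """Return how many times larger a is than b."""
    return a_petaflops / b_petaflops


# Figures quoted in the text: Dojo's 1-exaflop target and the
# 442-petaflop reference supercomputer.
dojo_target_pf = exaflops_to_petaflops(1.0)
reference_pf = 442.0

ratio = throughput_ratio(dojo_target_pf, reference_pf)
print(f"{dojo_target_pf:,.0f} PF vs {reference_pf:,.0f} PF -> {ratio:.2f}x")
```

On these numbers, the Dojo target works out to roughly 2.26 times the 442-petaflop figure.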

With the new 10,000-unit H100 cluster now activated and Dojo on the horizon, Tesla has gone a long way toward meeting its need for more training power.

Within a year, Tesla may operate AI infrastructure that rivals the computing capacity of small nations. The company is ramping total training compute spending past $2 billion annually through 2024.

This rapidly expanding resource could scale FSD V12 end-to-end training beyond what competitors can muster. Tesla’s insatiable appetite for data, combined with this level of processing power, will likely fuel breakthroughs in efficient neural nets.

While expanding the training fleet and video dataset remains vital, Tesla’s surging computational firepower promises to unlock more of autonomous driving’s potential.